ETL Patterns

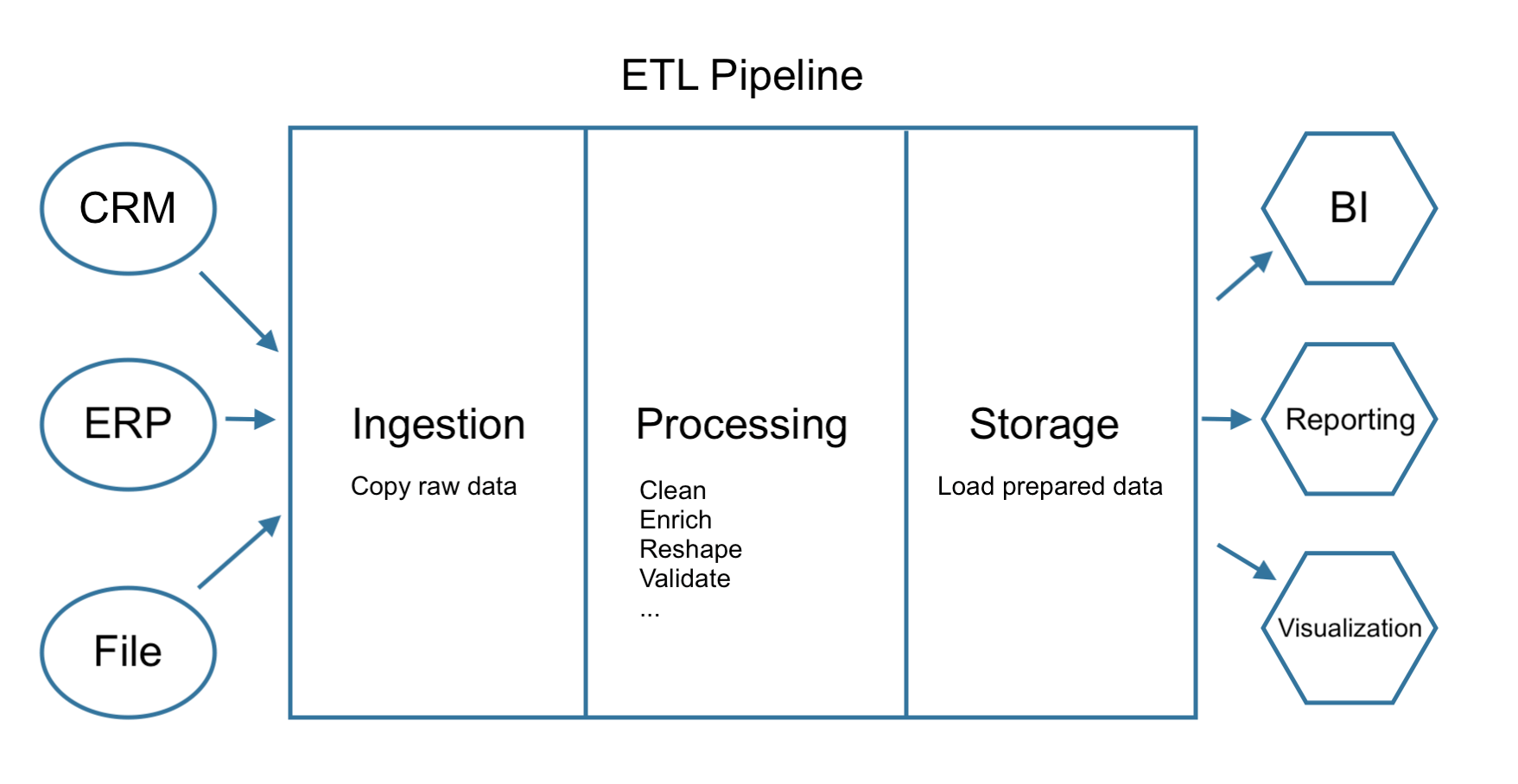

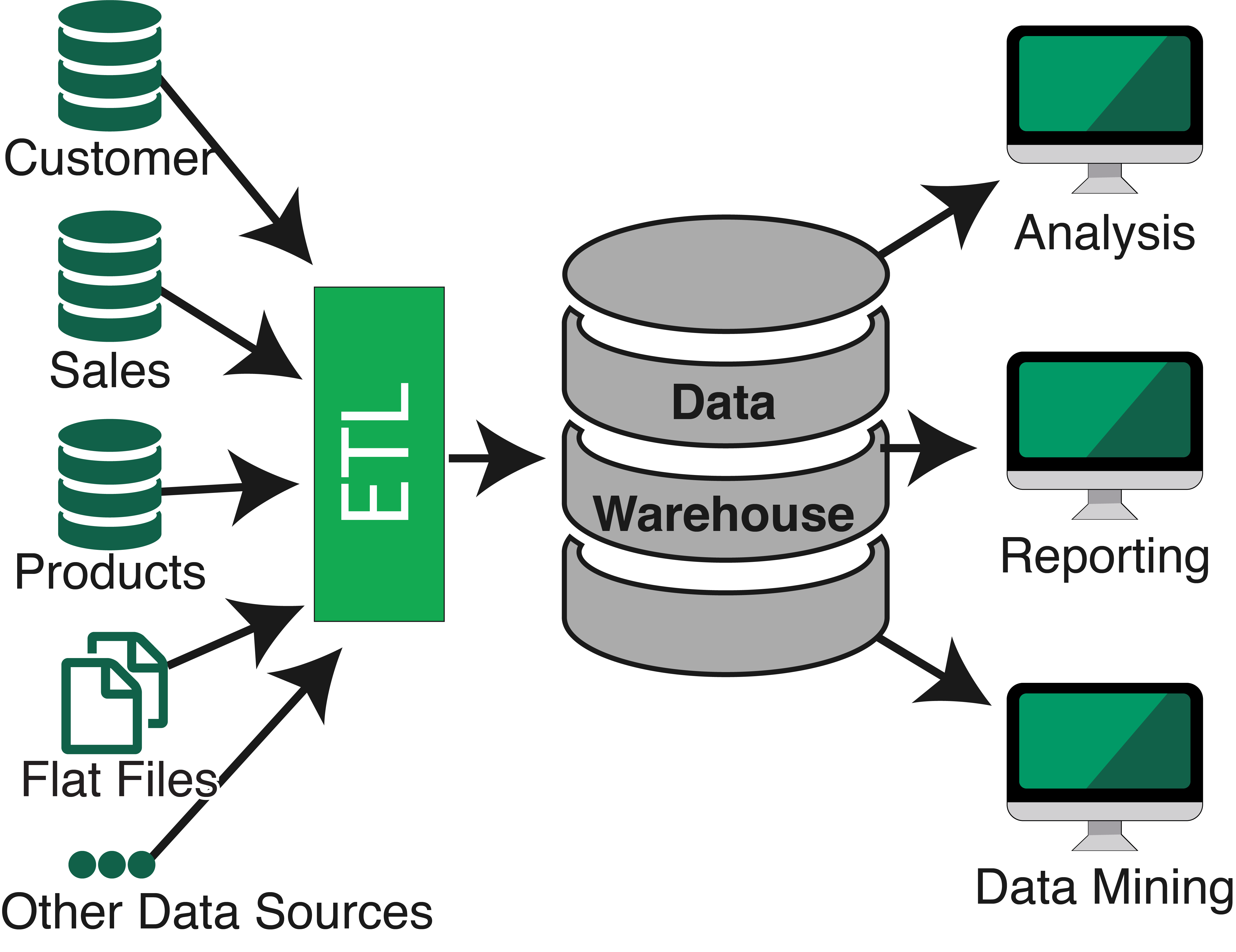

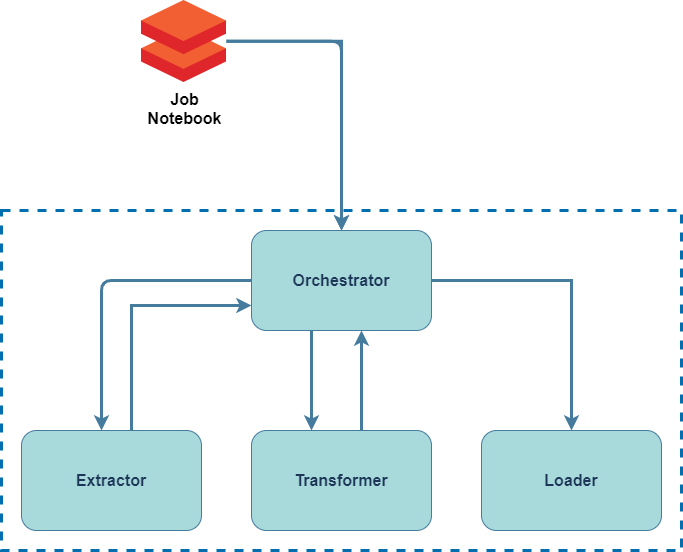

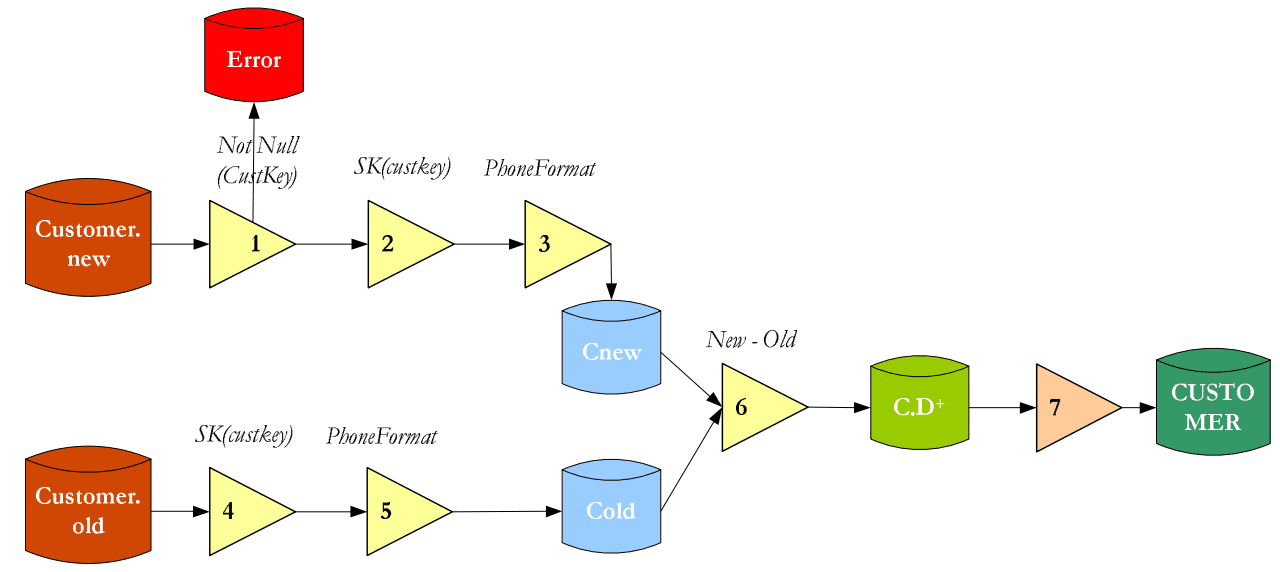

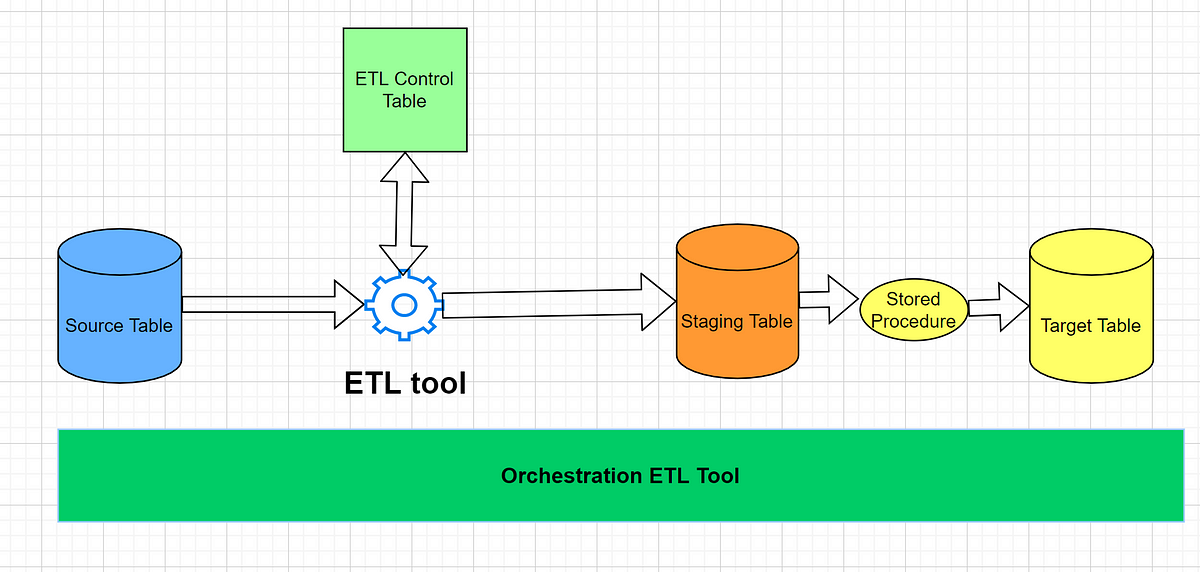

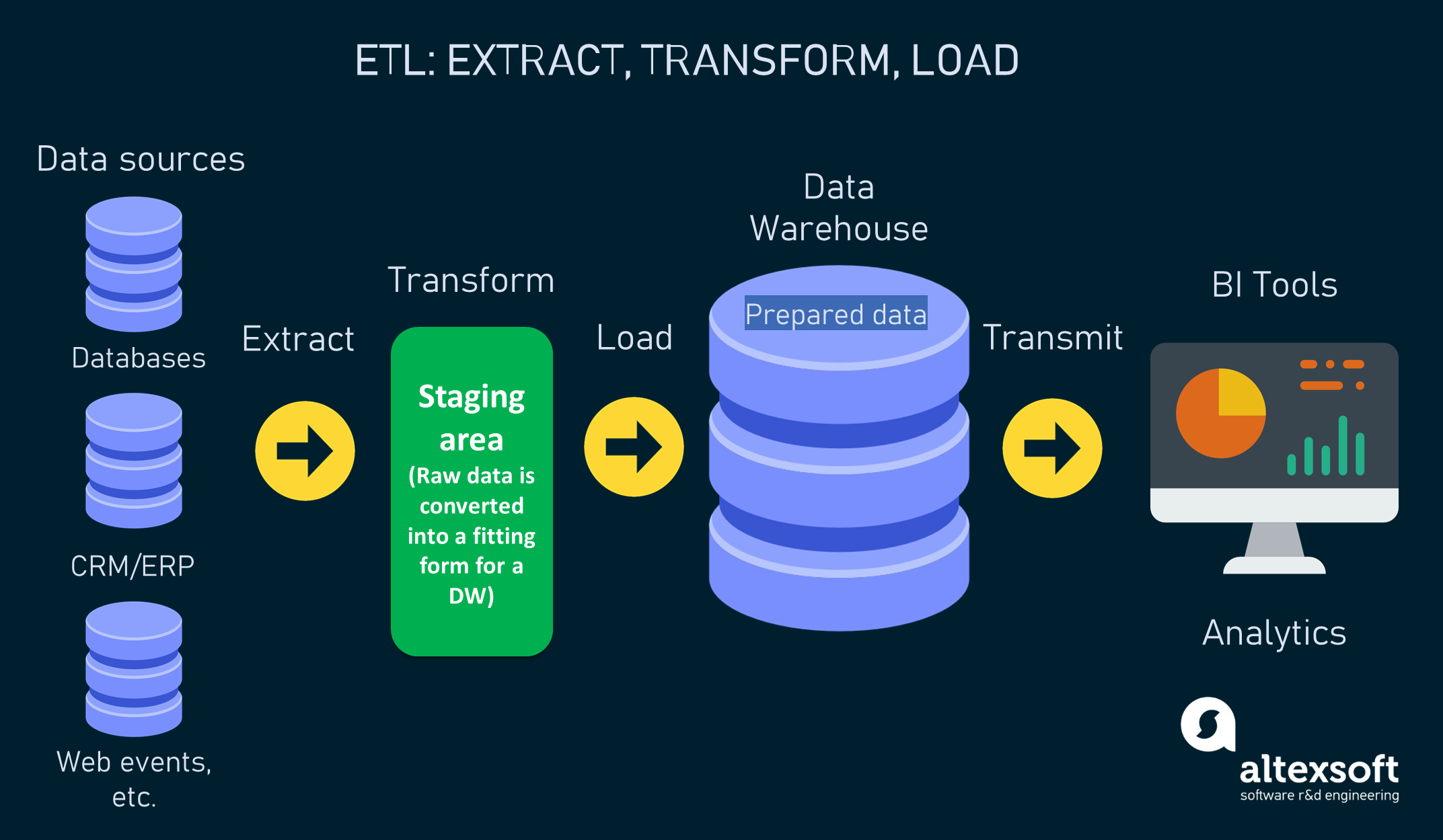

Since the early 1990s, ETL has been the de facto standard for integrating data into a data warehouse, and it continues to be a common pattern for data warehousing, data lakes, operational data stores, and master data hubs. ETL (extract, transform, load) is a data integration process that combines, cleans, and organizes data from multiple sources into a single, consistent data set for storage in a data warehouse, data lake, or other target system. After extraction, it transforms the data according to business rules and loads the data into a destination data store.

At a high level, ETL jobs comprise the following three steps: extracting data from one data source, transforming it, and loading the processed data into another data source. The data can be collated from one or more sources, and it can also be output to one or more destinations. Batch processing is by far the most prevalent technique for performing ETL tasks. ETL tools work in concert with a data platform, and ETL data pipelines provide the foundation for data analytics and machine learning workstreams. Data warehouses provide organizations with a knowledgebase that decision makers rely on.

The extract and load pattern is a straightforward ETL design approach suitable for simple data integration scenarios, and it ranges from simple to complex. A design pattern has also been proposed for writing ETL data pipeline code (MLOps). The case considered here is “ETL pipelines centered on an EDW (enterprise data warehouse)”; however, the design patterns below are applicable to processes run on any architecture using most any ETL tool.

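
The three steps of an ETL job (extract, transform, load) can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the CSV sample, the `orders` table, the function names, and the business rules (trim and lowercase customer names, skip rows whose amount is not a number) are all assumptions made up for this example. It uses only the standard library, with an in-memory SQLite database standing in for the destination data store.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a small CSV export with one bad row.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,3.25
3,alice,n/a
"""


def extract(text):
    """Extract: read raw rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: apply example business rules to the raw rows."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # assumed rule: skip rows with a non-numeric amount
        customer = row["customer"].strip().lower()
        clean.append((int(row["order_id"]), customer, amount))
    return clean


def load(rows, conn):
    """Load: write the processed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(text, conn):
    """Run the three steps in order."""
    load(transform(extract(text)), conn)


conn = sqlite3.connect(":memory:")
run_etl(RAW_CSV, conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping each step in its own function means each one can be tested in isolation, for example by calling `transform` on a hand-written list of rows without touching a database.
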
Extract, transform, and load (ETL) is a data pipeline used to collect data from various sources; put another way, it is the process of combining data from multiple sources into a large, central repository called a data warehouse. The extraction and the subsequent transformations are often done using an ETL tool such as SQL Server Integration Services. The transformation work in ETL takes place in a specialized engine.

If CDC (change data capture) tools are used, the latest changes in the source database can be reflected in the target within minutes or seconds. ETL requires a thorough understanding of best practices, ETL design patterns, and use cases to ensure accuracy and efficiency in the process. Use this framework to reduce debugging time and increase testability, including in multi-environment production setups.

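
To illustrate how CDC keeps a target close to the source, the sketch below applies a stream of change events to a target table. It covers only the "apply" side: real CDC tools typically capture these events from the source database for you, and that part is not shown. The `ChangeEvent` type, the operation names, and the dictionary used as a target are hypothetical stand-ins chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeEvent:
    """One captured change (hypothetical shape for this sketch)."""
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row contents; unused for deletes


def apply_changes(target, events):
    """Apply change events, in order, to a target keyed by primary key."""
    for event in events:
        if event.op in ("insert", "update"):
            if event.row is None:
                raise ValueError(f"{event.op} event for key {event.key} has no row")
            target[event.key] = dict(event.row)
        elif event.op == "delete":
            target.pop(event.key, None)  # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown operation: {event.op}")
    return target


# Target state before the changes arrive.
target = {1: {"name": "alice"}, 2: {"name": "bob"}}
events = [
    ChangeEvent("update", 1, {"name": "alicia"}),
    ChangeEvent("delete", 2),
    ChangeEvent("insert", 3, {"name": "carol"}),
]
print(apply_changes(target, events))
```

Because only the changed rows travel to the target, each run is small, which is what allows changes to be applied frequently instead of waiting for a full batch reload.
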
The ETL pattern provides a clear separation of concerns, allowing data engineers to focus on each step individually. The first pattern is ETL, which transforms the data before it is loaded into the data warehouse. ETL uses a set of business rules to clean and organize raw data and prepare it for storage, data analytics, and machine learning (ML). Mastering ETL is essential for successful data integration.

Related article: Better Extract/Transform/Load (ETL) Practices in Data Warehousing (Part 2 of 2). By Aaron Segesman, Solution Architect, Matillion. Part 1 discussed common customer use cases and design best practices for ELT and ETL.

First, some housekeeping: with batch processing come numerous best practices, which I’ll address here and there, but only as they pertain to the pattern. There is no limit to the number of ways you can design a data architecture; the case here is ETL pipelines centered on an EDW (enterprise data warehouse).

The impact of healthcare data usage on people’s lives lies at the heart of why data governance in healthcare is so crucial. In healthcare, managing the accuracy, quality, and integrity of data is the focus of data governance.

The extract and load pattern involves extracting data from one or more sources and loading it directly into the target system without any transformation. Batch ETL jobs, unlike CDC-based replication, update the target database at most once every 4 hours.
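
The extract and load pattern, with one or more sources and one or more destinations, might look like the sketch below. The names are hypothetical: each source is modeled as a function returning records, and each destination as a function that accepts one record. Records are passed through unchanged, which is the defining trait of this pattern.

```python
def extract_and_load(sources, destinations):
    """Copy every record from every source to every destination, unchanged.

    sources: callables that each return an iterable of records.
    destinations: callables that each accept a single record.
    Returns the number of records read from the sources.
    """
    count = 0
    for read in sources:
        for record in read():
            for write in destinations:
                write(record)
            count += 1
    return count


# Hypothetical sources: two in-memory "systems".
def crm_source():
    return [{"id": 1, "name": "alice"}, {"id": 2, "name": "bob"}]


def shop_source():
    return [{"id": 3, "name": "carol"}]


# Hypothetical destinations: plain lists standing in for target systems.
warehouse = []
archive = []

copied = extract_and_load([crm_source, shop_source],
                          [warehouse.append, archive.append])
print(copied, len(warehouse), len(archive))
```

Adding a source or destination means adding one more callable to the corresponding list; the copy loop itself does not change.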

Database Lifecycle Management for ETL Systems Simple Talk

Deconstructing "The EventBridge ETL" CDK Pattern

ETL Architecture A Fit for Your Data Pipeline? Coupler.io Blog

Reducing the Need for ETL with MongoDB Charts MongoDB Blog

Orchestrated ETL Design Pattern for Apache Spark and Databricks

ETL Workflow Modeling

ETL Best Practices. ETL Design Patterns. What Is ETL?

Matillion ETL Error Handling Patterns

Beginner’s Guide Extract Transform Load (ETL) Playbook Incremental

GitHub immanuvelprathap/ETLSales_Analysis_ReportMySQLPowerBI

However, The Design Patterns Below Are Applicable To Processes Run On Any Architecture Using Most Any ETL Tool.

This Pattern Also Enables Parallel Processing, As Each Step Can Be Performed Independently And In Parallel.
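
One way to read this: work that does not depend on other work, such as extracting from several unrelated sources, can run concurrently. The sketch below runs hypothetical extract functions in parallel threads using Python's standard `concurrent.futures` module. The two extractors and their return values are placeholders; threads suit I/O-bound extraction, and other workloads may call for a different approach.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical, independent extract functions (placeholders for real I/O).
def extract_orders():
    return [{"source": "orders", "id": 1}]


def extract_customers():
    return [{"source": "customers", "id": 7}]


def extract_all(extractors, max_workers=4):
    """Run independent extractors concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(fn) for fn in extractors]
        # result() blocks until that extractor finishes and re-raises its errors.
        return [future.result() for future in futures]


batches = extract_all([extract_orders, extract_customers])
print(batches)
```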

Extracting Data From One Data Source;

Designing An ETL Design Pattern.
